Deepesh Patel

Jun 27, 2025

Carter Hoffman

Jun 27, 2025

In January 2024, an employee at a multinational firm’s Hong Kong office received a video call from what appeared to be her chief financial officer and other senior colleagues. They instructed her to urgently transfer funds for a “secret transaction”. Over 15 transactions, she sent about HKD 200 million (USD 25 million) to the fraudsters, only later discovering that the colleagues on the call were AI-generated deepfake avatars imitating her real executives.

This alarming incident shows that artificial intelligence (AI) has created new avenues for financial fraud, and the trade finance industry must take notice. As part of the Bankers Association for Finance and Trade (BAFT) Future Leaders Program, the AI Team (Abigale Ning from Standard Chartered, Adel AlZarooni from First Abu Dhabi Bank, Carmen Landeras Cabrero from Banco Santander, Carmen Maria Ramirez Ortiz from ADB, Erik Rost from Citi, Heidi Pun from ANZ Bank, Oskar Nordlander from SEB, and William Rattray from JP Morgan) published their research paper ‘Identifying and Countering New AI Threat Vectors in Trade Finance’. The paper warns that AI tools can erode traditional safeguards and lower the bar for criminals to launch sophisticated scams.

Based on the findings from their paper and presentation at the BAFT Global Annual Meeting in Washington in May, this article examines the emerging threat of AI-generated fraud in trade finance and explores how prepared the industry is, how regulators are responding, and what must happen next to counter these risks.

AI is supercharging age-old fraud tactics, giving rise to new categories of threat vectors. Trade, treasury, and payments professionals should be aware of how these AI-fueled schemes work:

Deepfake Impersonations: AI can create ultra-realistic synthetic video or audio of real people (“deepfakes”), allowing criminals to impersonate executives, clients, or officials with chilling accuracy. In the Hong Kong case above, scammers used AI avatars to mimic a CFO’s face and voice on a live call, enough to convince a wary employee to approve huge payments. The OECD has cautioned that AI-generated content is difficult to distinguish from human-generated content in 50% of cases, which makes phishing and voice scams far more convincing and harder to stop.

Synthetic Identities and Fake Personas: Beyond mimicking known individuals, AI enables the creation of entirely fictitious people or companies that appear legitimate. Fraudsters can generate fake passports, IDs, corporate profiles, even realistic faces – fabricating synthetic identities that pass cursory Know-Your-Customer checks. The UN Office on Drugs and Crime reported in 2024 that criminal syndicates in Asia are already leveraging generative AI and deepfakes to bolster their fraud operations. In trade finance, a fraudster might spin up a shell import-export company complete with AI-generated incorporation documents, a professional-looking website, and bogus director identities (including photos and voice recordings) to open bank accounts and secure financing.

Document Forgery at Scale: Trade finance runs on documents, and AI now has the ability to fabricate all of them with astonishing fidelity. With generative AI models, a fraudster can conjure up fake documents that are largely indistinguishable from legitimate ones. In one imagined scam lifecycle, AI could generate an entire set of shipping documents (e.g. packing lists, quality certificates, even tracking data) to “prove” a fictitious shipment, leading a bank to honour a letter of credit for goods that never existed. Since banks, under rules like UCP 600, deal in documents, not goods, a portfolio of flawless forgeries presents a serious challenge to traditional verification processes. While banks do perform checks and balances to spot fraud, the sheer volume and quality of AI-fabricated paperwork can strain those defences.

AI-Augmented Social Engineering: Classic fraud techniques such as phishing emails can be supercharged by AI. Language models can draft highly persuasive, bespoke phishing emails in perfect business language, tailored to recipients using scraped data. For instance, an AI might instantly comb public databases for a target company’s trading partners and craft fraudulent messages that mimic those partners’ style and context. This digital social engineering often works hand-in-hand with deepfakes and forgeries. For example, an AI-written email might coax a victim onto a deepfake video call, or a spoofed chat might be used to verify fake documents.
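To make the document-forgery point concrete, here is a minimal Python sketch of one kind of check a document examiner or screening tool might run: comparing the same data points across a presented document set and flagging any that disagree. The `TradeDocument` class, the `find_mismatches` function, and the field names are all hypothetical and chosen for illustration; they do not come from the BAFT paper or from any bank's actual system.

```python
from dataclasses import dataclass


@dataclass
class TradeDocument:
    """Hypothetical container for fields extracted from one trade document."""
    doc_type: str
    fields: dict


def find_mismatches(documents, keys):
    """Return {key: {doc_type: value}} for each key whose values disagree.

    Documents that do not carry a given key are ignored for that key.
    """
    mismatches = {}
    for key in keys:
        values = {d.doc_type: d.fields[key] for d in documents if key in d.fields}
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches


# Illustrative data only: the packing list disagrees with the invoice on quantity.
presented = [
    TradeDocument("invoice", {"quantity": 500, "vessel": "MV Example"}),
    TradeDocument("packing_list", {"quantity": 480, "vessel": "MV Example"}),
    TradeDocument("bill_of_lading", {"vessel": "MV Example"}),
]
flags = find_mismatches(presented, ["quantity", "vessel"])
print(flags)
```

A check like this only catches a forgery set that contradicts itself. A set of AI-generated documents that is internally consistent would pass, which is why comparing presented data against an independent source (for example, carrier or port records) matters more as forgeries improve.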

The uncomfortable truth is that much of the trade finance industry is not yet ready to confront these AI-enabled threats.

Many banks and corporates still rely on legacy processes and siloed information, leaving gaps that savvy fraudsters can exploit. In trade finance, transaction data is scattered across banks, shippers, insurers, and buyers, with no single party seeing the full picture. Verification often depends on paper documents and trust between parties, a system that AI-generated fakes can undermine. Moreover, large portions of the industry continue to use PDFs, emails and Excel spreadsheets as mainstays of processing. This outdated ecosystem, slow to adopt structured data and automation, provides fertile ground for deception.

Trade finance has been digitalising, but unevenly. Although bodies like the ICC have introduced electronic document frameworks, uptake has been patchy, which means the sector’s technological defences haven’t kept pace with fraudsters’ technological offences.

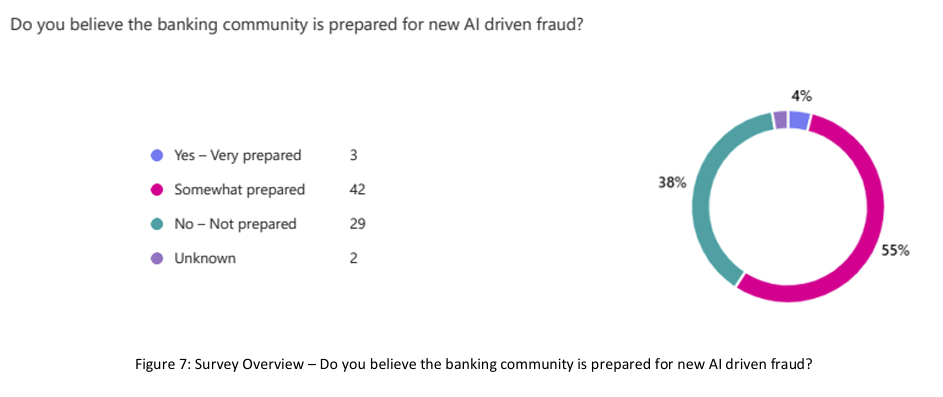

A survey of trade finance professionals in the BAFT Future Leaders paper illustrates the readiness gap. When asked if the banking community is prepared for AI-driven fraud, only 4% of respondents felt banks were “very prepared,” while 38% said the industry is “not prepared”. Just over half gave a lukewarm “somewhat prepared” rating.

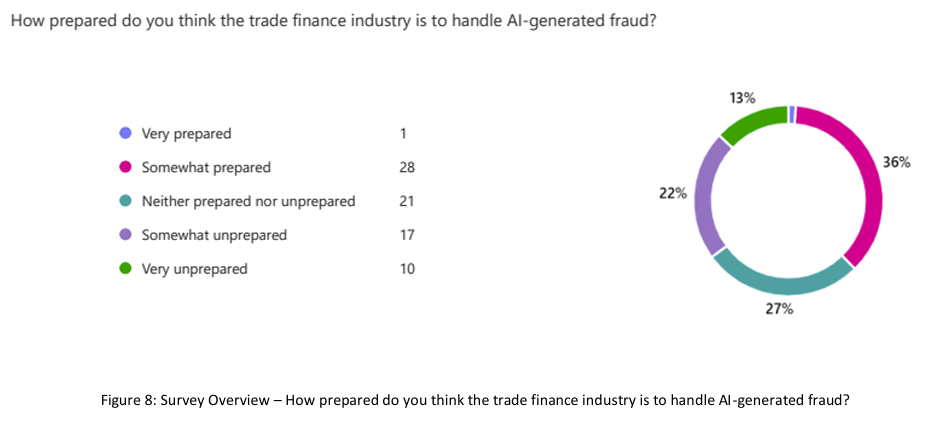

Specifically in the trade finance sector (as opposed to general banking), nearly half of respondents described the industry as either somewhat or very unprepared to handle AI-generated fraud. Only a tiny fraction (around 1%) believed trade finance was very prepared.

This lack of confidence is telling. It likely stems from the highly transactional nature of the trade finance business, involving many participants (some of whom are outside the banking system). Such characteristics make it harder to deploy new technological safeguards across the board.

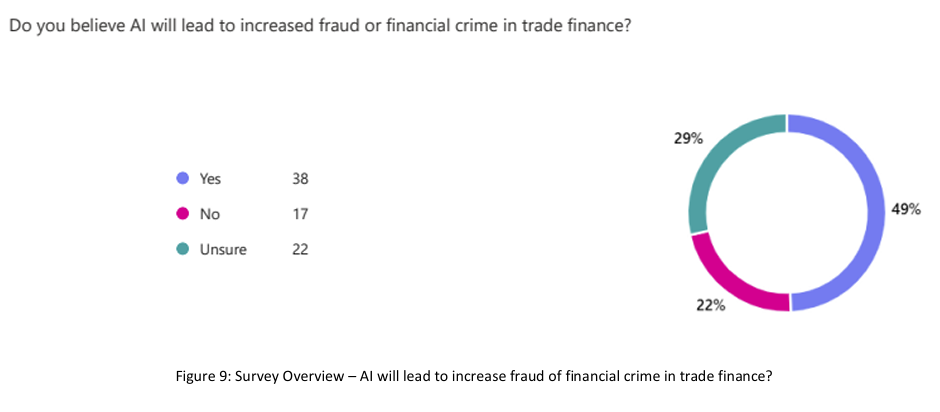

The survey also revealed that, by a margin of more than two to one, professionals expect AI to increase fraud risk in the near future. Almost half (49%) of respondents believe AI will lead to more fraud and financial crime in trade finance, versus only 22% who think it won’t. When asked which areas are most vulnerable, the top answers were “deepfakes and impersonation” and “document forgery,” confirming that industry insiders see those as the clearest present dangers.

This perception aligns with external analyses. Deloitte, for example, predicts that generative AI could drive financial fraud losses as high as $40 billion in the US alone by 2027 (up from $12.3 billion in 2023). In other words, the threat is expected to grow dramatically, yet current preparedness remains low. Bridging this gap is now an urgent priority for the trade, treasury, and payments community.
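For a sense of scale, the two figures quoted above imply a compound annual growth rate of roughly 34%. The short Python sketch below shows the arithmetic; it uses only the start and end values cited in this article, and the function name is illustrative.

```python
def implied_annual_growth(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1 / years) - 1


# USD 12.3 billion in 2023 rising to USD 40 billion in 2027 (figures quoted above).
rate = implied_annual_growth(12.3, 40.0, 2027 - 2023)
print(f"Implied annual growth: {rate:.1%}")
```

This is the growth rate implied by the two endpoints alone, not a forecast of how losses would be distributed across the intervening years.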

Regulators and international organisations have started to wake up to the double-edged nature of AI in finance.

The European Union’s AI Act, a sweeping law to govern AI applications, entered into force in August 2024. The Act uses a risk-based approach where certain AI uses in finance (such as credit scoring models) are deemed “high-risk” and subject to strict requirements on transparency, oversight and data quality. At the same time, policymakers recognise that using AI for fraud prevention is part of the solution. In fact, the European Parliament proposed during drafting that AI systems deployed to detect fraud in financial services should not be considered high-risk under the Act, to avoid hampering innovation in anti-fraud technology.

Beyond specific AI laws, financial regulators and law enforcement agencies are issuing guidance on emerging fraud typologies. For example, the US Treasury’s FinCEN in 2024 warned banks to be vigilant about deepfake identities being used to open accounts, and the FBI and others have flagged AI-generated content as an increasing component of cybercrime.

In response, financial regulators in major markets are pushing banks to strengthen verification processes and controls to account for AI-generated fakes. Industry groups are urging greater information-sharing about new scam techniques. Even the cybersecurity domain is pivoting to focus on deepfake detection and authentication technologies. There is also movement on standardising digital trade documentation and leveraging blockchain or other tamper-evident systems, which could make it harder for fake documents to go unnoticed.

For instance, the International Chamber of Commerce’s Digital Standards Initiative (DSI) and other bodies have rolled out frameworks for electronic bills of lading and trade APIs; if widely adopted, such measures can reduce reliance on easily falsified paper records.
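As a rough illustration of what “tamper-evident” means in practice, the Python sketch below attaches a keyed hash (HMAC-SHA256) to a document's bytes and shows that any later alteration makes verification fail. This is a simplified assumption made for illustration: the function names and sample data are hypothetical, the shared-secret design is a stand-in, and nothing here describes how the ICC DSI frameworks or any electronic bill of lading platform actually work. Production systems would more likely rely on public-key digital signatures.

```python
import hashlib
import hmac


def sign_document(document_bytes: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the document under a shared secret key."""
    return hmac.new(key, document_bytes, hashlib.sha256).hexdigest()


def verify_document(document_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Recompute the tag and compare it to the expected one in constant time."""
    actual_tag = sign_document(document_bytes, key)
    return hmac.compare_digest(actual_tag, expected_tag)


# Illustrative values only.
shared_key = b"demo-key-not-for-production"
original = b"Bill of lading: 500 cartons, vessel MV Example"
tag = sign_document(original, shared_key)

tampered = b"Bill of lading: 900 cartons, vessel MV Example"
print(verify_document(original, shared_key, tag))   # unchanged document
print(verify_document(tampered, shared_key, tag))   # altered quantity
```

The point of the sketch is that a forger who can produce a visually perfect document still cannot produce a valid tag without the key, so verification shifts from judging how a document looks to checking where it came from.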

Governments, for their part, are updating legal definitions of fraud and identity theft to encompass synthetic media and AI-generated content, ensuring that perpetrators of these novel schemes can be prosecuted. Still, aligning global regulatory responses will take time, and time is a luxury the industry may not have as criminals accelerate their use of AI.

Every stakeholder in the trade, treasury, and payments ecosystem has a role to play in fortifying against the threats of AI-driven fraud. Banks and financial institutions should invest in advanced fraud detection systems that use AI against AI. Such tools, however, are not a silver bullet and must be complemented by process and people resilience.

The BAFT Future Leaders paper stresses that “the trade finance ecosystem must continue to prioritize process resilience, cross-sector collaboration, and education. As AI becomes more sophisticated… it can exploit weaknesses in existing fraud prevention technology more efficiently and at a lower cost.”

On a strategic level, the industry should push for secure digitalisation of trade flows. Embracing digital document systems with robust authentication can close off avenues for document forgery, while moving key communications from email to more secure platforms can mitigate phishing.

If a deepfake can fool an employee into wiring USD 25 million, it can fool a trade desk, a compliance officer, or a bank’s fraud filter. The counter-fraud toolbox needs an upgrade for the AI era, and the longer organisations delay, the more opportunity criminals have to hone their AI-assisted techniques. Trade finance has weathered fraud threats for centuries; with foresight and collective action, it can overcome this new AI-generated menace as well.

Trade Treasury Payments is the trading name of Trade & Transaction Finance Media Services Ltd (company number: 16228111), incorporated in England and Wales, at 34-35 Clarges St, London W1J 7EJ. TTP is registered as a Data Controller under the ICO: ZB882947. VAT Number: 485 4500 78.

© 2025 Trade Treasury Payments. All Rights Reserved.